sharemyimage

Chevereto Member

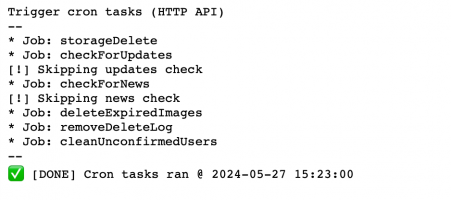

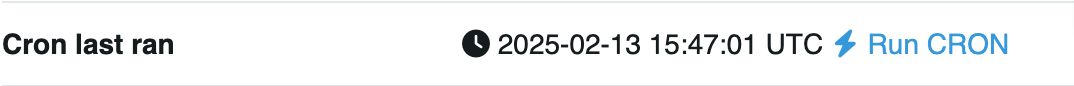

The cron job is not working in 4.1.4. When I try to run it manually from the dashboard, it says:

Is anyone else encountering a similar issue?

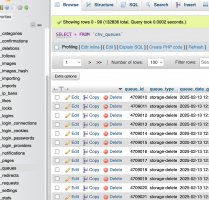

What SQL query could I use to clear the queue so the images are removed?

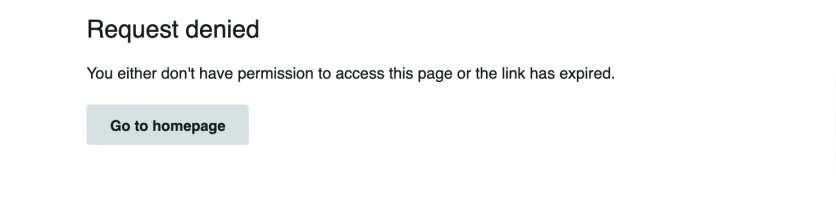

> Request denied
>
> You either don't have permission to access this page or the link has expired.
